Our lab studies brain-machine interfaces (BMIs) that control upper-limb prostheses. In particular, we are interested in how the brain controls complex hand movements. The human hand has about 30 dimensions, in contrast to the human arm, which has only 7. BMIs that control human arms have already been demonstrated with reasonable accuracy. The central research question of the lab is what type of interface is needed to extend this control from 7 to 37 dimensions. We address this question from two directions: a descending approach, which studies neural recordings corresponding to human movement and behavior, and an ascending approach, which studies human hand kinematics and the nature and representation of muscle activity during hand movements. These studies apply linear and nonlinear signal processing, control, and optimization methods.

If you are interested in discussing lab research or positions, please contact Dr. Vinjamuri.

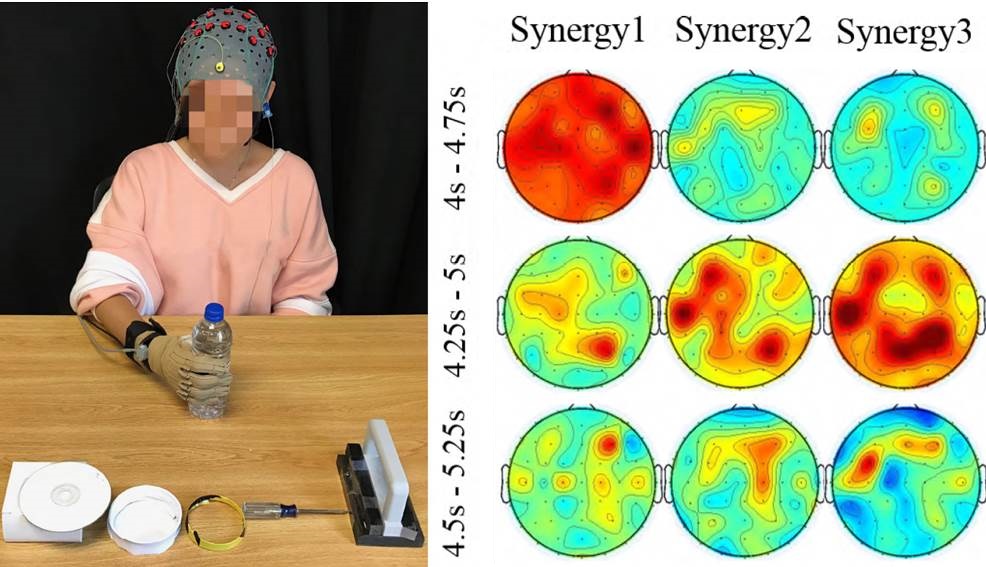

Neural Decoding of Hand Grasps

The human central nervous system (CNS) effortlessly performs complex hand movements by controlling and coordinating multiple degrees of freedom. It is hypothesized that the CNS might use kinematic synergies to reduce the complexity of movements, but how these kinematic synergies are encoded in the CNS remains unclear. To investigate the neural representations of kinematic synergies, scalp electroencephalographic (EEG) signals and hand kinematics were recorded during representative types of hand grasping. The results show that the weights of the kinematic synergies used to reconstruct a particular movement were strongly correlated with EEG features obtained from that movement. These results have promising applications in noninvasive neural control of synergy-based prostheses and exoskeletons.

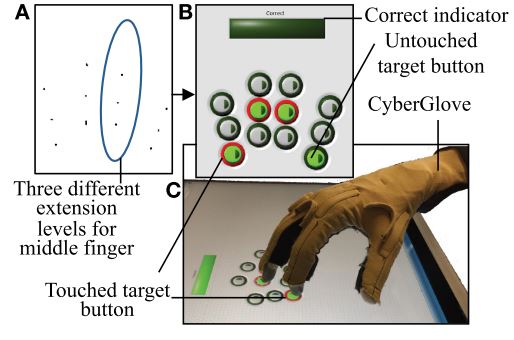

Hand Grasp Biomechanics

The foundation of our research lies in the biomechanics of how people grasp objects. By conducting human studies on reaching and grasping of objects, we gain insights into the underlying control paradigms which allow the brain to operate our highly redundant upper limbs. We use mathematical tools to derive whole-hand patterns of motion, called synergies, that can be independently controlled instead of attempting to control individual joints. Our work with these motion patterns has demonstrated results consistent with grasp preplanning and execution observed in other studies, and lends credence to the utility of synergies for creating biomimetic control systems. We have used similar methods to study arm movement during reaching, and have shown promising results for synergies as task-training tools.
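As a rough illustration of how whole-hand motion patterns can be derived from joint data, the sketch below applies principal component analysis (via singular value decomposition) to synthetic joint velocities. The choice of PCA, the function names `extract_synergies` and `reconstruct`, the 10-joint layout, and the randomly generated data are all assumptions made for this example; they are not taken from the lab's published methods or recordings.

```python
import numpy as np


def extract_synergies(joint_velocities, n_synergies):
    """Derive motion patterns ("synergies") with PCA computed through SVD.

    joint_velocities: array of shape (n_samples, n_joints).
    Returns the synergies (n_synergies, n_joints), their per-sample
    weights (n_samples, n_synergies), the per-joint mean, and the
    fraction of variance the kept synergies explain.
    """
    X = np.asarray(joint_velocities, dtype=float)
    mean = X.mean(axis=0)
    Xc = X - mean  # center each joint channel
    _, s, Vt = np.linalg.svd(Xc, full_matrices=False)
    synergies = Vt[:n_synergies]  # orthonormal rows, one per synergy
    weights = Xc @ synergies.T    # projection of each sample onto the synergies
    explained = float((s[:n_synergies] ** 2).sum() / (s ** 2).sum())
    return synergies, weights, mean, explained


def reconstruct(weights, synergies, mean):
    """Rebuild joint velocities from a small number of synergy weights."""
    return weights @ synergies + mean


# Synthetic data (assumption): 10 joints driven by 2 hidden patterns plus noise.
rng = np.random.default_rng(0)
n_samples, n_joints, k = 200, 10, 2
true_patterns = rng.standard_normal((k, n_joints))
activations = rng.standard_normal((n_samples, k))
noise = 0.01 * rng.standard_normal((n_samples, n_joints))
data = activations @ true_patterns + noise

synergies, weights, mean, explained = extract_synergies(data, k)
approx = reconstruct(weights, synergies, mean)
print(f"variance explained by {k} synergies: {explained:.4f}")
```

In this toy setting, two weights per time sample stand in for ten joint values, which is the sense in which controlling synergies is lower-dimensional than controlling individual joints.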

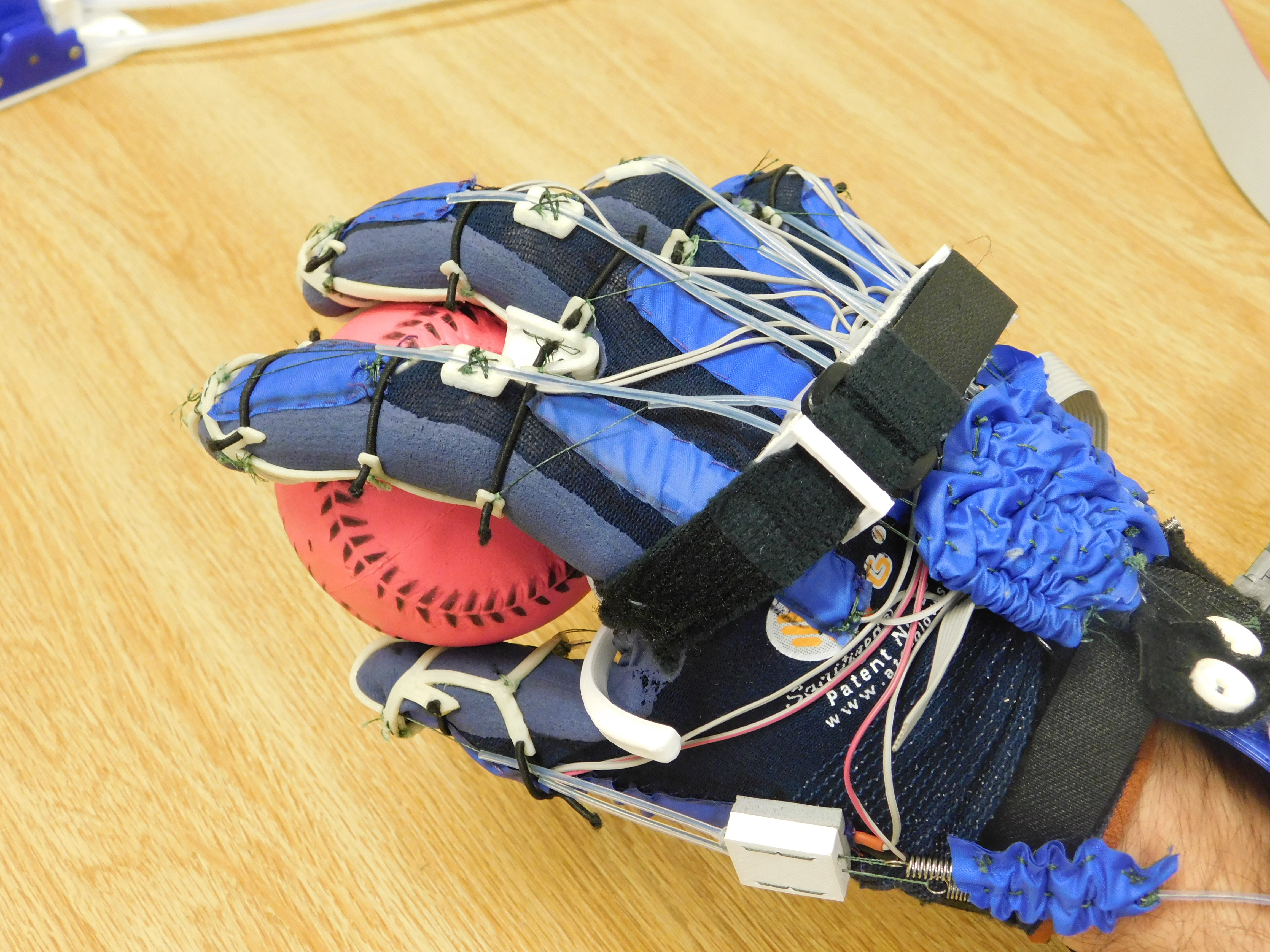

HEXOES: Hand Exoskeleton with Embedded Synergies

Hand paralysis, frequently caused by stroke, spinal cord injury, or traumatic brain injury, diminishes an individual’s ability to perform activities of daily living (ADL) and thus reduces their independence. A system that can restore their functional ability to perform ADL, either through daily assistance in grasping tasks or through hand rehabilitation, would lead to reduced rates of depression as well as increased mental, emotional, and physical wellbeing. For this purpose, we present HEXOES, the hand exoskeleton with embedded synergies. HEXOES is a soft glove-based hand exoskeleton capable of independently actuating 10 joints of the hand. A remote actuator assembly provides flexion force through a cable bundle, and springs on the dorsal side of the hand provide adjustable extension force. This system is currently being used to evaluate assistive and rehabilitative controls, and as a test bed for novel technologies in wearable robotics. In the future, we envision similar systems being used in a person’s day-to-day life to restore the ability to perform ADL, or in improved rehabilitation methods that restore a person’s natural hand function to the same effect.

Human-Robot Interaction and Collaboration

Emotionally intelligent interactions between humans and robots are essential to accomplish shared tasks in collaborative processes. Robots may exploit various communication channels to recognize human emotions, including hearing, speech, sight, touch, and learning. They can then use this information to adapt their behavior in a way that is socially appropriate for humans. In this research, we focus on neurophysiological and behavioral channels of communication. We identify three emerging channels for conveying emotions in the human-robot interaction (HRI) context. These channels include (i) emotional intelligence perception, (ii) brain-computer interfaces, and (iii) human-robot collaboration through learning. Our emphasis on these three areas is driven by the crucial role of emotional intelligence in human-robot interactions.

Neurotechnologies for Substance Use Disorder

Despite scientific and technological advances in areas related to the brain and behavior, few devices and interventions are available to help individuals with substance use disorder (SUD). The overall goal of this study is to develop an integrated system that can manage SUD using wearable sensors by detecting and managing substance use triggers, specifically stress. This system will have four major components: (1) detection of stress and emotion recorded using electrodermal activity (EDA) and electroencephalography (EEG) sensors; (2) machine-learning and artificial intelligence algorithms for improving the detection and estimation of stress; (3) neurofeedback using EEG sensors to measure, manage, and modify brain activity and thus behavior; and (4) a smartphone app that provides a user-friendly graphical user interface. The proposed technology, with its unique ability to influence the brain and behavior, will impact individuals affected by this serious epidemic in a most immediate and personal manner.

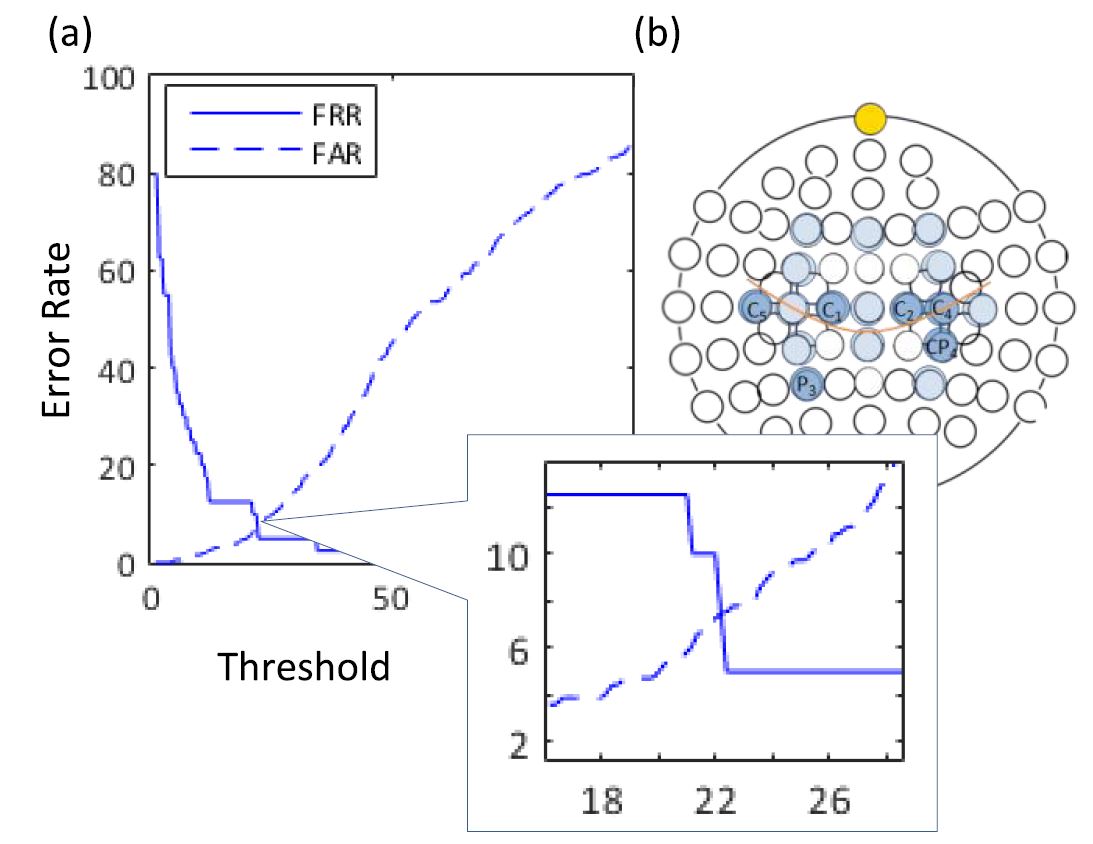

Grasp Biometrics

Data and personal security are more important in the information age than ever before. Modern biometrics such as fingerprints, gait analysis, iris scanning, and palm scanning attempt to use biological information to identify an individual. In this research area, we take a novel approach by using hand grasping as a biometric. Hand grasping is a very complex process that is shaped by an individual’s physiology, cognitive processes, and history of motor learning. The motion patterns found when an individual grabs an object are unique and have the potential to serve as an identifying marker for that individual. We are using our extensive experience with grasp kinematics and neuromuscular biosignals to create algorithms and technologies that lead to more secure biometrics.